Provide foundation for interactive content on secondary screens/devices

#11

Posted 27 December 2017 - 08:17 AM

Static content is served using the HTTP GET method, while dynamic content is delivered with the POST method. The POST method will service the various APIs that the Open Rails community defines. APIs will be either read-only or read/write (in terms of ORTS data). API data provided by the WebServer is delivered in JSON format, which the client can act on with JavaScript and/or jQuery. Communication with the server for API data will typically be performed using Ajax on the client side.
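As a rough illustration of the request pattern described above, the sketch below builds a POST request for an API call and parses a JSON reply. The endpoint name `HUD`, the helper names and the reply fields are hypothetical, since the thread does not fix the actual API names or JSON layout. It also assumes the browser's `fetch` in place of jQuery's Ajax helpers.

```javascript
// Minimal client-side sketch. Assumptions (not from the thread): the API name
// "HUD", the helper names, and the shape of the JSON reply are hypothetical.

// Build the URL and fetch() options for an API call; APIs are requested with POST.
function buildApiRequest(apiName, payload) {
  return {
    url: "/API/" + encodeURIComponent(apiName) + "/",
    options: {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify(payload === undefined ? {} : payload),
    },
  };
}

// Parse the JSON text of a reply; return null rather than throwing on bad JSON.
function parseApiReply(text) {
  try {
    return JSON.parse(text);
  } catch (err) {
    return null;
  }
}

// In a browser the two pieces would be combined roughly like this:
//   const req = buildApiRequest("HUD");
//   fetch(req.url, req.options)
//     .then((res) => res.text())
//     .then((text) => render(parseApiReply(text))); // render() is hypothetical
```

Keeping the request building and the parsing separate from the network call makes both easy to test without a running server.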

While James has talked about pushing data to the client, my conception is to let the client handle all requests and not burden the server with maintaining the client connection. The aim is to keep the server processing as minimal as possible. Of course, that’s up for discussion.

While the implementation is preliminary and there is much testing to be done, I felt that input from the community would be important at this point, before I get too far along, in case changes need to be made at the low level. At this preliminary stage, it will be easier to change direction if my conception differs from that of the rest of the community.

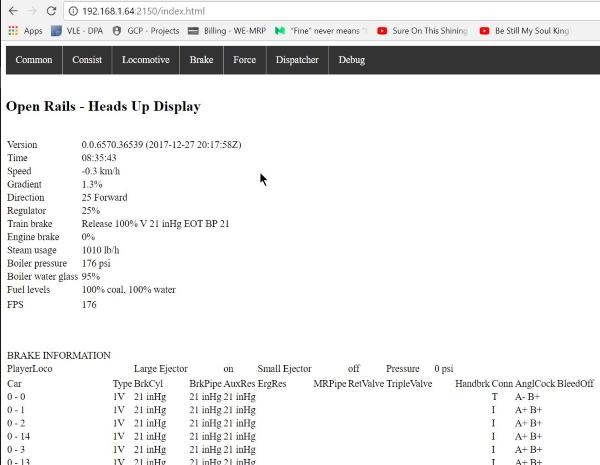

I have currently implemented one API that delivers the HUD window(s) to the browser. The output is very similar to the HUD windows within Open Rails. The client side uses HTML, CSS and JavaScript to display the data received from the WebServer. It does not include the graphical elements of the HUD windows yet. My first impression is that the display in the browser could do with some reformatting. Since the JavaScript requests new data at set intervals, we might want to think about separating the more dynamic data members into a separate region of the page that is updated more frequently, while the things that don’t change as often are updated less frequently.
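The idea of refreshing different page regions at different rates could be sketched as below. The region names and intervals are hypothetical examples, not values taken from Open Rails, and `refresh()` stands in for whatever code would request and redraw one region.

```javascript
// Sketch of per-region refresh scheduling. The region names and intervals are
// hypothetical examples, not values taken from Open Rails.
const regions = [
  { name: "forces", intervalMs: 500 },   // fast-changing values
  { name: "consist", intervalMs: 5000 }, // rarely changes
];

// Return the names of the regions due for a refresh at time nowMs, and record
// the refresh time so that each region keeps its own schedule.
function dueRegions(regionList, lastRefresh, nowMs) {
  const due = [];
  for (const region of regionList) {
    const last = lastRefresh[region.name];
    if (last === undefined || nowMs - last >= region.intervalMs) {
      due.push(region.name);
      lastRefresh[region.name] = nowMs;
    }
  }
  return due;
}

// In a page this would be driven by a timer, for example:
//   const last = {};
//   setInterval(() => dueRegions(regions, last, Date.now()).forEach(refresh), 100);
// where refresh(name) is a hypothetical function that updates one region.
```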

What I’d like to do going forward is to:

• Complete the graphical components of the HUD windows

• Implement the remainder of the popup windows

• Work on the ability to display a “Google Maps” type display of the current route

So... what are your thoughts?

#12

Posted 27 December 2017 - 08:25 AM

#13

Posted 27 December 2017 - 08:55 AM

Goku, on 27 December 2017 - 08:25 AM, said:

There are several issues with WebSockets.

1) My understanding is that we are currently constrained to .NET 3.5, while WebSockets appear to be available only in .NET 4.5. However, communication could be done at a lower socket level with our own custom protocol, which brings up the second issue.

2) We want to allow access to the OR data from mobile devices and tablets (ordinary browsers). Not everyone has a second monitor to use as a secondary screen, but lots of people have a tablet of some sort. HTTP seems the easiest way to provide that functionality. I'm not sure about trying to implement a custom protocol on, say, an iPad or Android device.

3) So far, the thinking has been to keep the server-side processing to a minimum. My client is updating once every 500 milliseconds via JavaScript and that interval seems acceptable, at least for the data in the HUD display. I haven't done any profiling to see how much of a drag that puts on the program. I'm not sure we need to be in 'real' real time.

Why do you feel that WebSockets is the only acceptable solution for dynamic content, assuming it's not 'real' real time?

#14

Posted 27 December 2017 - 09:18 AM

Quote

I think there are 3rd party libs for C# with WebSocket support.

Quote

All current browsers support WebSocket. That's why it's WebSocket and not just Socket.

https://caniuse.com/#feat=websockets

Quote

If you are thinking only about 500 ms intervals then POST isn't bad. But I think that's a wrong assumption. If you are working on a good, universal solution, you should consider that some people want 20 ms intervals.

And a 20 ms interval is a killer for HTTP requests.
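To put the two intervals in perspective, here is a quick calculation of the request rate for a single polling client. This is simple arithmetic, not a measurement, and the function name is just for illustration.

```javascript
// Requests per second generated by one client polling at a fixed interval.
function requestsPerSecond(intervalMs) {
  if (!(intervalMs > 0)) {
    throw new RangeError("intervalMs must be positive");
  }
  return 1000 / intervalMs;
}

requestsPerSecond(500); // 2
requestsPerSecond(20);  // 50
```

Each of those requests carries its own HTTP headers, so moving from 500 ms to 20 ms means 25 times as many full request/response exchanges per client.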

#15

Posted 28 December 2017 - 10:03 AM

I was not familiar with WebSockets. Thank you for bringing it to my attention.

I did a bit of research and testing. In a very unscientific and not very rigorous test, I found that at polling intervals of ½, 1 and 2 seconds, there is little or no impact on frames per second. On my system, FPS was between 185 and 200 in all three cases, the same as with no load on the WebServer at all. When I went down to 20 ms, performance dropped significantly, to about 100 FPS. This tells us little about the difference between HTTP POST and WebSockets, but it does tell us there is no significant effect on performance at the slower intervals. In addition, for the kind of applications we are initially considering, the HUD and popup windows, 20 ms is way too fast. The values that do change, change so fast as to be almost unreadable, for example in the Force HUD window.

I looked at the protocol spec and decided that it would be easy to integrate it into the HTTP server I’ve already implemented. A WebSocket server depends on an HTTP conversation in order to initiate the WebSocket protocol. In my server, I fork two different paths depending on whether the request method is GET or POST. My WebServer requires an API request to be a POST and to have a URI that starts with “/API/”. I could easily fork a third path when a GET request URI starts with “/API/”, giving a fork for WebSocket requests. The infrastructure for WebSockets is there, if and when we need it.
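The three-way fork described above can be sketched as a small routing function. This is only an illustration in JavaScript, not the actual server code, which is not shown in this thread. The returned labels are hypothetical, and rejecting a POST outside “/API/” is an assumption.

```javascript
// Sketch of the three-way fork. The labels returned here are hypothetical
// names used for illustration only.
function routeRequest(method, uri) {
  const isApi = uri.startsWith("/API/");
  if (method === "POST") {
    // API requests must be POSTs whose URI starts with "/API/".
    // Assumption: any other POST is rejected.
    return isApi ? "api" : "rejected";
  }
  if (method === "GET") {
    // A GET under "/API/" could begin the WebSocket handshake;
    // any other GET serves static content.
    return isApi ? "websocket" : "static";
  }
  return "rejected";
}

routeRequest("GET", "/index.html"); // "static"
routeRequest("POST", "/API/HUD/");  // "api"
routeRequest("GET", "/API/HUD/");   // "websocket"
```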

My programming philosophy has always been to start small and implement a step at a time, even if it requires reworking the code (now, I guess they call it refactoring; seems we did all the cool stuff years ago, just didn’t have a name for it).

Can you think of any applications where we might need 20 ms communications?

Thanks again for bringing WebSockets to my attention.

Dan

#16

Posted 30 December 2017 - 01:42 AM

The magic thing about the data provided by the webserver is that anyone with web skills can produce (and share) their own webpages to show what they want to see, organised in a way to suit themselves.

I made an extension recently for a French member who needed the cab instrument values extracting to drive his hardware. My simple scheme worked but was primitive and not worth including in OR. Thanks to HighAspect, I can now extend OR with an API to extract those cab instrument values and they can be displayed on a second screen/device any way you want.

#17

Posted 30 December 2017 - 02:02 AM

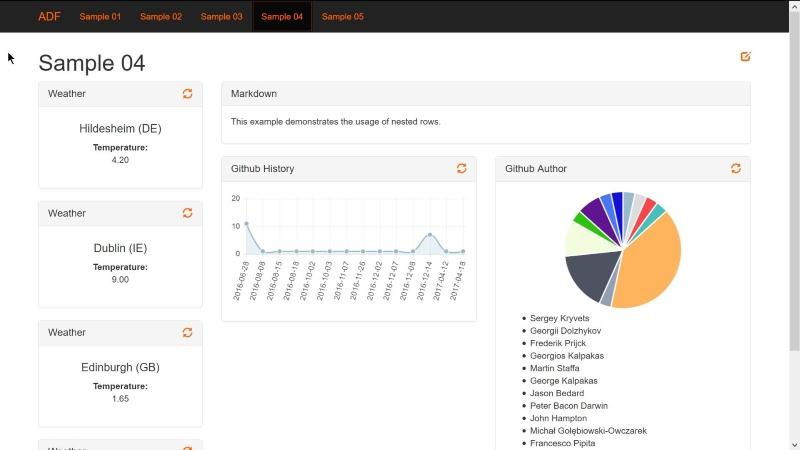

For showing the pop-up windows F1, F4 etc., we could make use of a "dashboard" such as this one.

It's all coded in JavaScript to run in a browser, shows text, links, and charts and data points that update. It's also configurable - by clicking the Edit icon (top right) you can re-arrange the panels and add new ones to suit you (just as you do with the F1, F4 pop-ups).

As soon as the webserver is available to provide all the data, then anyone can adapt a dashboard like this and publish it in the File Library.

#18

Posted 02 April 2018 - 11:16 AM

Here is a photo of 3 browsers showing one of his HUD pages displayed (clockwise from top):

- on a second monitor on the PC which is running Open Rails

- on a laptop with a WiFi connection to the LAN

- on a tablet with a WiFi connection to the LAN

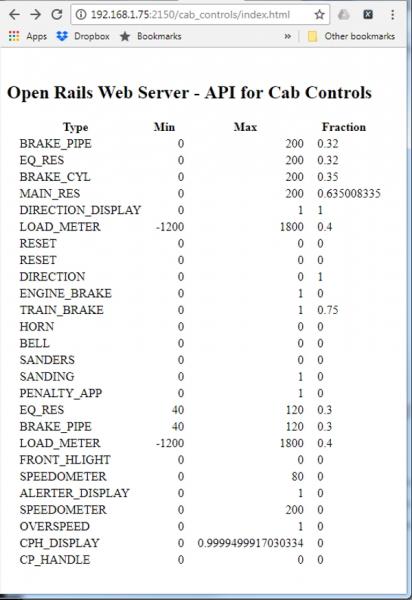

I'm now trying to code an API for myself. This will publish all the cab controls, e.g. Speedometer, Train Brake etc., onto the secondary device.

#19

Posted 04 April 2018 - 08:14 AM

cjakeman, on 02 April 2018 - 11:16 AM, said:

Had some success. Here is a webpage showing the data:

The webpage above is just a way to test the new API. The aim of this API is to provide the data needed to drive real cab instruments as used by member BB22210 (as in this still from a video):

Now that I have learned to make an API, I'm wondering what APIs Open Rails users would like to ship with the next version. I had thought maybe a gradient indicator, but I'm open to suggestions . . .

#21

Posted 04 April 2018 - 09:30 AM

Some pretty heady stuff - that really opens a big door for some major improvement - thanks so much for the hard work... For testing, any instrumentation you feel like porting would be a great proof of concept... Going back to my Flight Sim roots - I've grown very accustomed to using my iPad as an auxiliary device, offloading some of the functionality we used to have to get off the main PC screen (blocking the view)... Personally - I'd love some of that steam locomotive HUD info available on my iPad...

I'm still hoping for some real DirectX joystick support before we get into all those custom controls... I'm not sure how you plan for those custom controls to connect to the sim, but in Flight Simulator most 3rd party custom controls (Home Cockpit Hardware - a big step above your typical joystick) use USB/DirectX and SimConnect/FSUIPC... Some home cockpits cost more than a real plane...

LOL - and the TrackIR we've already chatted about...

Regards,

Scott

#22

Posted 04 April 2018 - 09:50 AM

scottb613, on 04 April 2018 - 09:30 AM, said:

Dan's already proven the HUD, so that will be included in the first release.

scottb613, on 04 April 2018 - 09:30 AM, said:

Did you know that Open Rails already has a mechanism for controlling things? The Replay option is just a sequence of commands that replicates the user's input. I expect we could use that to interpret commands received using HTTP:POST.
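One way such a POSTed command might be validated before being handed to the simulator is sketched below. The command names and JSON fields are hypothetical; they do not correspond to actual Open Rails replay commands, and the real handling would live on the server side.

```javascript
// Sketch only: the command names and JSON fields are hypothetical and do not
// correspond to actual Open Rails replay commands.
const knownCommands = new Set(["TrainBrake", "Throttle", "ToggleSwitch"]);

// Turn the body of an HTTP POST into a command object, or null if invalid.
function parseCommand(body) {
  let data;
  try {
    data = JSON.parse(body);
  } catch (err) {
    return null; // not valid JSON
  }
  if (data === null || typeof data !== "object") {
    return null; // JSON, but not an object
  }
  if (!knownCommands.has(data.command)) {
    return null; // unknown or missing command name
  }
  const value = data.value === undefined ? null : data.value;
  return { command: data.command, value: value };
}
```

Accepting only a fixed list of commands keeps an arbitrary browser on the LAN from sending anything the simulator does not expect.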

scottb613, on 04 April 2018 - 09:30 AM, said:

Not forgotten about that :-)

#23

Posted 04 April 2018 - 12:14 PM

Looking at a potential feature list today, yeah, I suppose any textual information OR can produce can be shipped off to this interface. That's really great!

I don't know much about HTML coding, so please excuse my ignorance:

- Will the OR team stop at the server-side output, or will you guys also be providing some client-side displays?

- Are you using CSS so that information can be displayed on screens of substantially different sizes?

- Can the F4 and F8 displays be pushed through this interface?

- Can the interface handle two-way communications (e.g., if the F8 display goes out, can a finger touch on a smartphone send back the command to throw the switch)?

- One of the F5 windows shows brake parameter values, but there is never enough screen height to show more than about 40 items. Can we see the data for the whole train (scrolling) via this interface?

- Can the Activity file "point" to media files for display/playing of audio via this interface? I'm thinking of links being displayed in some cases, actual display/playing in others. For example, a selectable link to show an image of where to spot a car is an optionally used bit of information, whereas an audio file from the dispatcher saying "Extra 2475 take the siding at Jonestown for a meet w/ Train 246" is not optional. Both would be handled as something the software sends over the interface when the player's train reaches a pre-defined location.

In short, the aim would be to make the second device, whatever it is, the superior display for conveying information and receiving commands from the player. Not exclusively, and not required for some things, but simply a superior UI by virtue of having an HTML display that is available to whoever wants to use it.

Any guess as to when we end users will be able to try this neat stuff?

#24

Posted 04 April 2018 - 01:50 PM

Genma Saotome, on 04 April 2018 - 12:14 PM, said:

And that's a great "use case". A number of people have asked for these messages to be removed and currently we have an option to suppress most of them. It would make sense to be able to divert them.

Genma Saotome, on 04 April 2018 - 12:14 PM, said:

One of the positives is that anyone with Web skills can provide a client-side display. Our focus ought to be on providing useful APIs which the client (secondary device) can use. We will provide some though. Dan was thinking of maps.

Genma Saotome, on 04 April 2018 - 12:14 PM, said:

Dan's initial version serves CSS files and also HTML, JavaScript, text, XML, JSON and images (GIF, JPEG, PNG, ICO).
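Serving those file types means sending a matching Content-Type header with each one. A minimal sketch of such a lookup follows. The extension list, the function name and the fallback type are assumptions, and the real server may do this differently.

```javascript
// Sketch of mapping file extensions to Content-Type values for the formats
// listed above. The extension list and the fallback type are assumptions.
const contentTypes = {
  css: "text/css",
  html: "text/html",
  js: "text/javascript",
  txt: "text/plain",
  xml: "application/xml",
  json: "application/json",
  gif: "image/gif",
  jpg: "image/jpeg",
  jpeg: "image/jpeg",
  png: "image/png",
  ico: "image/x-icon",
};

// Look up the Content-Type for a request path, ignoring the extension's case.
function contentTypeFor(path) {
  const dot = path.lastIndexOf(".");
  const ext = dot === -1 ? "" : path.slice(dot + 1).toLowerCase();
  return Object.prototype.hasOwnProperty.call(contentTypes, ext)
    ? contentTypes[ext]
    : "application/octet-stream"; // generic fallback for unknown types
}
```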

Genma Saotome, on 04 April 2018 - 12:14 PM, said:

Yes.

Genma Saotome, on 04 April 2018 - 12:14 PM, said:

Not tried that yet, but definitely possible and the existence of Replay should make that easier.

Genma Saotome, on 04 April 2018 - 12:14 PM, said:

Indeed you can. That's a real bonus.

Genma Saotome, on 04 April 2018 - 12:14 PM, said:

That's a neat idea. No reason why we couldn't serve audio and video too (might slug the frame rate a bit) but the interesting concept is embedding triggers in an activity which will cause a browser to fetch pages with links, images and audio.

Genma Saotome, on 04 April 2018 - 12:14 PM, said:

I'm guessing at a month from now.

P.S. Thanks for suggesting this idea all that time ago!

#25

Posted 04 April 2018 - 05:27 PM
